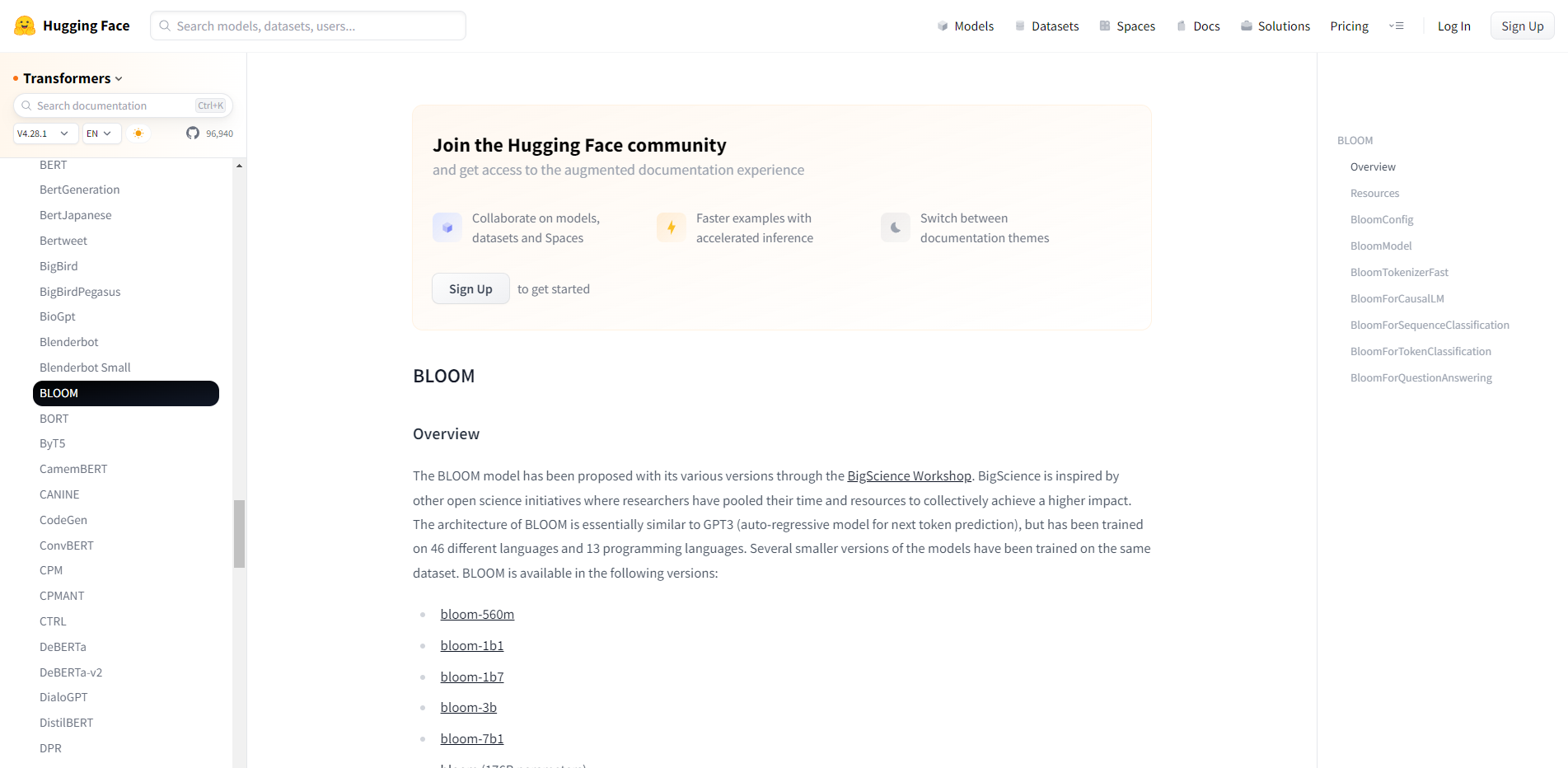

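The BLOOM model, in its various versions, was proposed through the BigScience Workshop. BigScience is inspired by other open science initiatives in which researchers pool their time and resources to collectively achieve a higher impact. The architecture of BLOOM is essentially similar to GPT3 (an auto-regressive model for next-token prediction), but it has been trained on 46 different languages and 13 programming languages. Several smaller versions of the model have been trained on the same dataset. BLOOM is available in several versions; the examples on this page use the bigscience/bloom-560m and bigscience/bloom checkpoints.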

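Resources

A list of official Hugging Face and community (indicated by 🌎) resources to help you get started with BLOOM. If you are interested in submitting a resource to be included here, please feel free to open a Pull Request and we will review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

See also:

⚡️ Inference

- A blog on Optimization story: Bloom inference.

- A blog on Incredibly Fast BLOOM Inference with DeepSpeed and Accelerate.

⚙️ Training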

BloomConfig

class transformers.BloomConfig

( vocab_size = 250880, hidden_size = 64, n_layer = 2, n_head = 8, layer_norm_epsilon = 1e-05, initializer_range = 0.02, use_cache = True, bos_token_id = 1, eos_token_id = 2, apply_residual_connection_post_layernorm = False, hidden_dropout = 0.0, attention_dropout = 0.0, pretraining_tp = 1, slow_but_exact = False, **kwargs )

Parameters

- vocab_size (int, optional, defaults to 250880) — Vocabulary size of the Bloom model. Defines the maximum number of different tokens that can be represented by the inputs_ids passed when calling BloomModel. Check this discussion on how the vocab_size has been defined.
- hidden_size (int, optional, defaults to 64) — Dimensionality of the embeddings and hidden states.
- n_layer (int, optional, defaults to 2) — Number of hidden layers in the Transformer encoder.
- n_head (int, optional, defaults to 8) — Number of attention heads for each attention layer in the Transformer encoder.
- layer_norm_epsilon (float, optional, defaults to 1e-5) — The epsilon to use in the layer normalization layers.
- initializer_range (float, optional, defaults to 0.02) — The standard deviation of the truncated_normal_initializer for initializing all weight matrices.
- apply_residual_connection_post_layernorm (bool, optional, defaults to False) — If enabled, use the layer norm of the hidden states as the residual in the transformer blocks.
- hidden_dropout (float, optional, defaults to 0.0) — Dropout rate of the dropout function on the bias dropout.
- attention_dropout (float, optional, defaults to 0.0) — Dropout rate applied to the attention probabilities.
- use_cache (bool, optional, defaults to True) — Whether or not the model should return the last key/values attentions (not used by all models).
- pretraining_tp (int, optional, defaults to 1) — Experimental feature. Tensor parallelism rank used during pretraining with Megatron. Please refer to this document to understand more about it. This value is necessary to ensure exact reproducibility of the pretraining results. Please refer to this issue. Note also that this is enabled only when slow_but_exact=True.
- slow_but_exact (bool, optional, defaults to False) — Experimental feature. Whether to use a slow but exact implementation of the attention mechanism. While merging the TP rank tensors, due to slicing operations the results may be slightly different between the model trained on Megatron and our model. Please refer to this issue. A solution to obtain more accurate results is to enable this feature. Enabling it increases inference time. This will probably be resolved in the future once the main model has been fine-tuned with TP_rank=1.

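This is the configuration class to store the configuration of a BloomModel. It is used to instantiate a Bloom model according to the specified arguments, defining the model architecture. Instantiating a configuration with the defaults will yield a similar configuration to that of the Bloom architecture bigscience/bloom.

Configuration objects inherit from PretrainedConfig and can be used to control the model outputs. Read the documentation from PretrainedConfig for more information.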
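As a minimal sketch of how the configuration class can be used, the snippet below builds a default BloomConfig and a customized one. It assumes the transformers package is installed; the overridden values (hidden_size=128, n_layer=4) are illustrative choices only, not recommended settings.

```python
from transformers import BloomConfig

# Default configuration: uses the default values listed in the class signature.
config = BloomConfig()
print(config.vocab_size, config.hidden_size, config.n_layer, config.n_head)

# Any documented argument can be overridden; these values are illustrative only.
custom = BloomConfig(hidden_size=128, n_layer=4, n_head=8)

# hidden_size is split evenly across attention heads.
head_dim = custom.hidden_size // custom.n_head
print(head_dim)
```

No weights are created here: a configuration only describes the architecture, and a model built from it starts with random weights unless from_pretrained() is used.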

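BloomModel

class transformers.BloomModel

( config: BloomConfig )

Parameters

- config (BloomConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The bare Bloom Model transformer outputting raw hidden-states without any specific head on top.

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, etc.).

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( input_ids : typing.Optional[torch.LongTensor] = None, past_key_values : typing.Union[typing.Tuple[typing.Tuple[torch.Tensor, torch.Tensor], ...], NoneType] = None, attention_mask : typing.Optional[torch.Tensor] = None, head_mask : typing.Optional[torch.LongTensor] = None, inputs_embeds : typing.Optional[torch.LongTensor] = None, use_cache : typing.Optional[bool] = None, output_attentions : typing.Optional[bool] = None, output_hidden_states : typing.Optional[bool] = None, return_dict : typing.Optional[bool] = None, **deprecated_arguments ) → transformers.modeling_outputs.BaseModelOutputWithPastAndCrossAttentions or tuple(torch.FloatTensor)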

Parameters

- input_ids (torch.LongTensor of shape (batch_size, input_ids_length)) — input_ids_length = sequence_length if past_key_values is None else past_key_values[0][0].shape[2] (sequence_length of input past key value states). Indices of input sequence tokens in the vocabulary. If past_key_values is used, only input_ids that do not have their past calculated should be passed as input_ids. Indices can be obtained using AutoTokenizer. See PreTrainedTokenizer.encode() and PreTrainedTokenizer.__call__() for details.
- past_key_values (Tuple[Tuple[torch.Tensor]] of length config.n_layers) — Contains precomputed hidden-states (key and values in the attention blocks) as computed by the model (see past_key_values output below). Can be used to speed up sequential decoding. The input_ids which have their past given to this model should not be passed as input_ids, as they have already been computed. Each element of past_key_values is a tuple (past_key, past_value):
  - past_key: [batch_size * num_heads, head_dim, kv_length]
  - past_value: [batch_size * num_heads, kv_length, head_dim]
- attention_mask (torch.FloatTensor of shape (batch_size, sequence_length), optional) — Mask to avoid performing attention on padding token indices. Mask values selected in [0, 1]:
  - 1 for tokens that are not masked,
  - 0 for tokens that are masked.
- head_mask (torch.FloatTensor of shape (num_heads,) or (num_layers, num_heads), optional) — Mask to nullify selected heads of the self-attention modules. Mask values selected in [0, 1]:
  - 1 indicates the head is not masked,
  - 0 indicates the head is masked.
- inputs_embeds (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size), optional) — Optionally, instead of passing input_ids you can choose to directly pass an embedded representation. This is useful if you want more control over how to convert input_ids indices into associated vectors than the model’s internal embedding lookup matrix. If past_key_values is used, optionally only the last inputs_embeds have to be input (see past_key_values).
- use_cache (bool, optional) — If set to True, past_key_values key value states are returned and can be used to speed up decoding (see past_key_values).
- output_attentions (bool, optional) — Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.
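The cache layout described for past_key_values can be made concrete with a small shape calculation. This is only a sketch in plain Python: bloom_cache_shapes and new_input_length are hypothetical helper names, and the layout follows the description in this parameter list (other library versions may store the cache differently).

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]


def bloom_cache_shapes(batch_size: int, num_heads: int, head_dim: int,
                       kv_length: int, n_layer: int) -> List[Tuple[Shape, Shape]]:
    """Hypothetical helper: expected (past_key, past_value) shapes for each layer."""
    past_key = (batch_size * num_heads, head_dim, kv_length)
    past_value = (batch_size * num_heads, kv_length, head_dim)
    return [(past_key, past_value) for _ in range(n_layer)]


def new_input_length(sequence_length: int, kv_length: int) -> int:
    """Tokens that still need to be passed as input_ids once kv_length tokens are cached."""
    return sequence_length - kv_length


shapes = bloom_cache_shapes(batch_size=2, num_heads=8, head_dim=8, kv_length=5, n_layer=2)
print(shapes[0])
print(new_input_length(sequence_length=6, kv_length=5))
```

With five tokens already cached and a total sequence of six, only the single new token is passed as input_ids on the next step.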

Returns

transformers.modeling_outputs.BaseModelOutputWithPastAndCrossAttentions or tuple(torch.FloatTensor)

A transformers.modeling_outputs.BaseModelOutputWithPastAndCrossAttentions or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (BloomConfig) and inputs.

- last_hidden_state (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size)) — Sequence of hidden-states at the output of the last layer of the model. If past_key_values is used, only the last hidden-state of the sequences, of shape (batch_size, 1, hidden_size), is output.
- past_key_values (tuple(tuple(torch.FloatTensor)), optional, returned when use_cache=True is passed or when config.use_cache=True) — Tuple of tuple(torch.FloatTensor) of length config.n_layers, with each tuple having 2 tensors of shape (batch_size, num_heads, sequence_length, embed_size_per_head) and optionally, if config.is_encoder_decoder=True, 2 additional tensors of shape (batch_size, num_heads, encoder_sequence_length, embed_size_per_head). Contains pre-computed hidden-states (key and values in the self-attention blocks and optionally, if config.is_encoder_decoder=True, in the cross-attention blocks) that can be used (see past_key_values input) to speed up sequential decoding.
- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, sequence_length, hidden_size). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.
- cross_attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True and config.add_cross_attention=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights of the decoder’s cross-attention layer, after the attention softmax, used to compute the weighted average in the cross-attention heads.

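The BloomModel forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of calling forward directly, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Example:

>>> from transformers import AutoTokenizer, BloomModel
>>> import torch

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")
>>> model = BloomModel.from_pretrained("bigscience/bloom-560m")

>>> inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")
>>> outputs = model(**inputs)

>>> last_hidden_states = outputs.last_hidden_state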
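To inspect the output shapes without downloading a checkpoint, a randomly initialized model can be built from a small configuration. The sketch below assumes torch and transformers are installed; the sizes (vocab_size=1000, batch of 2, sequence length 5) are arbitrary illustrative values, and the outputs are meaningless numerically because the weights are random.

```python
import torch
from transformers import BloomConfig, BloomModel

# Tiny, randomly initialized model: no pretrained weights are loaded.
config = BloomConfig(vocab_size=1000, hidden_size=64, n_layer=2, n_head=8)
model = BloomModel(config).eval()

# Random token ids of shape (batch_size, sequence_length).
input_ids = torch.randint(0, config.vocab_size, (2, 5))

with torch.no_grad():
    outputs = model(input_ids=input_ids, output_hidden_states=True)

# (batch_size, sequence_length, hidden_size)
print(outputs.last_hidden_state.shape)
# One entry for the embedding output plus one per layer.
print(len(outputs.hidden_states))
```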

BloomTokenizerFast

class transformers.BloomTokenizerFast

( vocab_file = None, merges_file = None, tokenizer_file = None, unk_token = '<unk>', bos_token = '<s>', eos_token = '</s>', pad_token = '<pad>', … )

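Parameters

- vocab_file (str) — Path to the vocabulary file.
- merges_file (str) — Path to the merges file.
- errors (str, optional, defaults to "replace") — Paradigm to follow when decoding bytes to UTF-8. See bytes.decode for more information.
- unk_token (str, optional, defaults to <unk>) — The unknown token. A token that is not in the vocabulary cannot be converted to an ID and is set to be this token instead.
- bos_token (str, optional, defaults to <s>) — The beginning of sequence token.
- eos_token (str, optional, defaults to </s>) — The end of sequence token.
- add_prefix_space (bool, optional, defaults to False) — Whether or not to add an initial space to the input. This allows the leading word to be treated just like any other word. (The Bloom tokenizer detects the beginning of words by the preceding space.)
- trim_offsets (bool, optional, defaults to True) — Whether or not the post-processing step should trim offsets to avoid including whitespaces.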

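Construct a “fast” Bloom tokenizer (backed by HuggingFace’s tokenizers library). Based on byte-level Byte-Pair-Encoding.

This tokenizer has been trained to treat spaces like parts of the tokens (a bit like sentencepiece), so a word will be encoded differently depending on whether or not it is preceded by a space:

>>> from transformers import BloomTokenizerFast

>>> tokenizer = BloomTokenizerFast.from_pretrained("bigscience/bloom")
>>> tokenizer("Hello world")["input_ids"]
[59414, 8876]

>>> tokenizer(" Hello world")["input_ids"]
[86153, 8876]

You can get around that behavior by passing add_prefix_space=True when instantiating this tokenizer, but since the model was not pretrained this way, it might yield a decrease in performance.

When used with is_split_into_words=True, this tokenizer needs to be instantiated with add_prefix_space=True.

This tokenizer inherits from PreTrainedTokenizerFast, which contains most of the main methods. Users should refer to this superclass for more information regarding those methods.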

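BloomForCausalLM

class transformers.BloomForCausalLM

( config: BloomConfig )

Parameters

- config (BloomConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The Bloom Model transformer with a language modeling head on top (linear layer with weights tied to the input embeddings).

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, etc.).

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( input_ids : typing.Optional[torch.LongTensor] = None, past_key_values : typing.Union[typing.Tuple[typing.Tuple[torch.Tensor, torch.Tensor], ...], NoneType] = None, attention_mask : typing.Optional[torch.Tensor] = None, head_mask : typing.Optional[torch.Tensor] = None, inputs_embeds : typing.Optional[torch.Tensor] = None, labels : typing.Optional[torch.Tensor] = None, …, output_attentions : typing.Optional[bool] = None, output_hidden_states : typing.Optional[bool] = None, … ) → transformers.modeling_outputs.CausalLMOutputWithCrossAttentions or tuple(torch.FloatTensor)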

Parameters

- input_ids (torch.LongTensor of shape (batch_size, input_ids_length)) — input_ids_length = sequence_length if past_key_values is None else past_key_values[0][0].shape[2] (sequence_length of input past key value states). Indices of input sequence tokens in the vocabulary. If past_key_values is used, only input_ids that do not have their past calculated should be passed as input_ids. Indices can be obtained using AutoTokenizer. See PreTrainedTokenizer.encode() and PreTrainedTokenizer.__call__() for details.
- past_key_values (Tuple[Tuple[torch.Tensor]] of length config.n_layers) — Contains precomputed hidden-states (key and values in the attention blocks) as computed by the model (see past_key_values output below). Can be used to speed up sequential decoding. The input_ids which have their past given to this model should not be passed as input_ids, as they have already been computed. Each element of past_key_values is a tuple (past_key, past_value):
  - past_key: [batch_size * num_heads, head_dim, kv_length]
  - past_value: [batch_size * num_heads, kv_length, head_dim]
- attention_mask (torch.FloatTensor of shape (batch_size, sequence_length), optional) — Mask to avoid performing attention on padding token indices. Mask values selected in [0, 1]:
  - 1 for tokens that are not masked,
  - 0 for tokens that are masked.
- head_mask (torch.FloatTensor of shape (num_heads,) or (num_layers, num_heads), optional) — Mask to nullify selected heads of the self-attention modules. Mask values selected in [0, 1]:
  - 1 indicates the head is not masked,
  - 0 indicates the head is masked.
- inputs_embeds (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size), optional) — Optionally, instead of passing input_ids you can choose to directly pass an embedded representation. This is useful if you want more control over how to convert input_ids indices into associated vectors than the model’s internal embedding lookup matrix. If past_key_values is used, optionally only the last inputs_embeds have to be input (see past_key_values).
- use_cache (bool, optional) — If set to True, past_key_values key value states are returned and can be used to speed up decoding (see past_key_values).
- output_attentions (bool, optional) — Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.
- labels (torch.LongTensor of shape (batch_size, sequence_length), optional) — Labels for language modeling. Note that the labels are shifted inside the model, i.e. you can set labels = input_ids. Indices are selected in [-100, 0, ..., config.vocab_size]. All labels set to -100 are ignored (masked); the loss is only computed for labels in [0, ..., config.vocab_size].
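The label shifting and the -100 masking described for labels can be illustrated with a small plain-Python calculation. This is a sketch of the idea only, not the library's implementation: shifted_lm_loss is a hypothetical helper, and the toy logits use a vocabulary of three tokens.

```python
import math
from typing import List

IGNORE_INDEX = -100  # labels with this value do not contribute to the loss


def shifted_lm_loss(logits: List[List[float]], labels: List[int]) -> float:
    """Mean cross-entropy where the logits at position t predict labels[t + 1]."""
    total, count = 0.0, 0
    for t in range(len(labels) - 1):
        target = labels[t + 1]
        if target == IGNORE_INDEX:
            continue
        row = logits[t]
        # Numerically stable log-sum-exp over the vocabulary.
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[target]
        count += 1
    return total / count if count else float("nan")


# Toy example: sequence of 3 tokens, vocabulary of 3 entries.
logits = [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
input_ids = [1, 0, 1]

loss = shifted_lm_loss(logits, labels=list(input_ids))  # labels = input_ids
masked_loss = shifted_lm_loss(logits, labels=[1, IGNORE_INDEX, 1])
print(loss, masked_loss)
```

Because the shift happens inside the loss, the caller passes the unshifted input_ids as labels; the logits of the final position are never scored.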

Returns

transformers.modeling_outputs.CausalLMOutputWithCrossAttentions or tuple(torch.FloatTensor)

A transformers.modeling_outputs.CausalLMOutputWithCrossAttentions or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (BloomConfig) and inputs.

- loss (torch.FloatTensor of shape (1,), optional, returned when labels is provided) — Language modeling loss (for next-token prediction).
- logits (torch.FloatTensor of shape (batch_size, sequence_length, config.vocab_size)) — Prediction scores of the language modeling head (scores for each vocabulary token before SoftMax).
- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, sequence_length, hidden_size). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.
- cross_attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Cross-attention weights after the attention softmax, used to compute the weighted average in the cross-attention heads.
- past_key_values (tuple(tuple(torch.FloatTensor)), optional, returned when use_cache=True is passed or when config.use_cache=True) — Tuple of torch.FloatTensor tuples of length config.n_layers, with each tuple containing the cached key and value states of the self-attention and the cross-attention layers if the model is used in an encoder-decoder setting. Only relevant if config.is_decoder = True. Contains pre-computed hidden-states (key and values in the attention blocks) that can be used (see past_key_values input) to speed up sequential decoding.

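The BloomForCausalLM forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of calling forward directly, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Example:

>>> import torch
>>> from transformers import AutoTokenizer, BloomForCausalLM

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")
>>> model = BloomForCausalLM.from_pretrained("bigscience/bloom-560m")

>>> inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")
>>> outputs = model(**inputs, labels=inputs["input_ids"])
>>> loss = outputs.loss
>>> logits = outputs.logits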

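BloomForSequenceClassification

class transformers.BloomForSequenceClassification

( config: BloomConfig )

Parameters

- config (BloomConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The Bloom Model transformer with a sequence classification head on top (linear layer).

BloomForSequenceClassification uses the last token in order to do the classification, as other causal models (e.g. GPT-1) do.

Since it does classification on the last token, it needs to know the position of the last token. If a pad_token_id is defined in the configuration, it finds the last token that is not a padding token in each row. If no pad_token_id is defined, it simply takes the last value in each row of the batch. Since it cannot guess the padding tokens when inputs_embeds are passed instead of input_ids, it does the same (takes the last value in each row of the batch).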
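The rule for locating the token used for classification can be sketched in plain Python. The helper name last_token_positions is hypothetical, the sketch assumes right-padded rows, and it is not the library's actual implementation.

```python
from typing import List, Optional


def last_token_positions(input_ids: List[List[int]],
                         pad_token_id: Optional[int]) -> List[int]:
    """Index of the token used for classification in each row (assumes right padding)."""
    positions = []
    for row in input_ids:
        if pad_token_id is None:
            # No padding token defined: take the last value in the row.
            positions.append(len(row) - 1)
            continue
        idx = len(row) - 1
        # Walk left past trailing padding tokens.
        while idx > 0 and row[idx] == pad_token_id:
            idx -= 1
        positions.append(idx)
    return positions


batch = [[5, 6, 7, 3, 3], [8, 9, 10, 11, 12]]
with_pad = last_token_positions(batch, pad_token_id=3)
without_pad = last_token_positions(batch, pad_token_id=None)
print(with_pad, without_pad)
```

With pad_token_id=3 the first row is classified from index 2 (the token 7); without a padding token, both rows fall back to their final index.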

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, etc.).

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( input_ids : typing.Optional[torch.LongTensor] = None, past_key_values : typing.Union[typing.Tuple[typing.Tuple[torch.Tensor, torch.Tensor], ...], NoneType] = None, attention_mask : typing.Optional[torch.Tensor] = None, head_mask : typing.Optional[torch.Tensor] = None, inputs_embeds : typing.Optional[torch.Tensor] = None, labels : typing.Optional[torch.Tensor] = None, …, output_attentions : typing.Optional[bool] = None, output_hidden_states : typing.Optional[bool] = None, … ) → transformers.modeling_outputs.SequenceClassifierOutputWithPast or tuple(torch.FloatTensor)

Parameters

- input_ids (torch.LongTensor of shape (batch_size, input_ids_length)) — input_ids_length = sequence_length if past_key_values is None else past_key_values[0][0].shape[2] (sequence_length of input past key value states). Indices of input sequence tokens in the vocabulary. If past_key_values is used, only input_ids that do not have their past calculated should be passed as input_ids. Indices can be obtained using AutoTokenizer. See PreTrainedTokenizer.encode() and PreTrainedTokenizer.__call__() for details.
- past_key_values (Tuple[Tuple[torch.Tensor]] of length config.n_layers) — Contains precomputed hidden-states (key and values in the attention blocks) as computed by the model (see past_key_values output below). Can be used to speed up sequential decoding. The input_ids which have their past given to this model should not be passed as input_ids, as they have already been computed. Each element of past_key_values is a tuple (past_key, past_value):
  - past_key: [batch_size * num_heads, head_dim, kv_length]
  - past_value: [batch_size * num_heads, kv_length, head_dim]
- attention_mask (torch.FloatTensor of shape (batch_size, sequence_length), optional) — Mask to avoid performing attention on padding token indices. Mask values selected in [0, 1]:
  - 1 for tokens that are not masked,
  - 0 for tokens that are masked.
- head_mask (torch.FloatTensor of shape (num_heads,) or (num_layers, num_heads), optional) — Mask to nullify selected heads of the self-attention modules. Mask values selected in [0, 1]:
  - 1 indicates the head is not masked,
  - 0 indicates the head is masked.
- inputs_embeds (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size), optional) — Optionally, instead of passing input_ids you can choose to directly pass an embedded representation. This is useful if you want more control over how to convert input_ids indices into associated vectors than the model’s internal embedding lookup matrix. If past_key_values is used, optionally only the last inputs_embeds have to be input (see past_key_values).
- use_cache (bool, optional) — If set to True, past_key_values key value states are returned and can be used to speed up decoding (see past_key_values).
- output_attentions (bool, optional) — Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.
- labels (torch.LongTensor of shape (batch_size,), optional) — Labels for computing the sequence classification/regression loss. Indices should be in [0, ..., config.num_labels - 1]. If config.num_labels == 1 a regression loss is computed (Mean-Square loss). If config.num_labels > 1 a classification loss is computed (Cross-Entropy).
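The choice between the two losses described for labels can be sketched in plain Python. This follows only the rule stated in the labels entry (one label means regression, more than one means classification); sequence_loss is a hypothetical helper, not the library's code, and multi-label handling via problem_type is left out.

```python
import math
from typing import List, Union


def sequence_loss(logits: List[List[float]],
                  labels: List[Union[int, float]],
                  num_labels: int) -> float:
    """Mean-square loss when num_labels == 1, cross-entropy otherwise."""
    if num_labels == 1:
        # Regression: compare the single score per example with a float target.
        return sum((row[0] - y) ** 2 for row, y in zip(logits, labels)) / len(labels)
    total = 0.0
    for row, y in zip(logits, labels):
        # Numerically stable log-sum-exp over the classes.
        m = max(row)
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[int(y)]
    return total / len(labels)


regression_loss = sequence_loss([[0.5], [2.0]], [1.0, 2.0], num_labels=1)
classification_loss = sequence_loss([[0.0, 0.0]], [1], num_labels=2)
print(regression_loss, classification_loss)
```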

Returns

transformers.modeling_outputs.SequenceClassifierOutputWithPast or tuple(torch.FloatTensor)

A transformers.modeling_outputs.SequenceClassifierOutputWithPast or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (BloomConfig) and inputs.

- loss (torch.FloatTensor of shape (1,), optional, returned when labels is provided) — Classification (or regression if config.num_labels==1) loss.
- logits (torch.FloatTensor of shape (batch_size, config.num_labels)) — Classification (or regression if config.num_labels==1) scores (before SoftMax).
- past_key_values (tuple(tuple(torch.FloatTensor)), optional, returned when use_cache=True is passed or when config.use_cache=True) — Tuple of tuple(torch.FloatTensor) of length config.n_layers, with each tuple having 2 tensors of shape (batch_size, num_heads, sequence_length, embed_size_per_head). Contains pre-computed hidden-states (key and values in the self-attention blocks) that can be used (see past_key_values input) to speed up sequential decoding.
- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, sequence_length, hidden_size). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.

The BloomForSequenceClassification forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of calling forward directly, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Example of single-label classification:

>>> import torch
>>> from transformers import AutoTokenizer, BloomForSequenceClassification

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")
>>> model = BloomForSequenceClassification.from_pretrained("bigscience/bloom-560m")

>>> inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> predicted_class_id = logits.argmax().item()

>>> # To train a model on `num_labels` classes, you can pass `num_labels=num_labels` to `.from_pretrained(...)`
>>> num_labels = len(model.config.id2label)
>>> model = BloomForSequenceClassification.from_pretrained("bigscience/bloom-560m", num_labels=num_labels)

>>> labels = torch.tensor([1])
>>> loss = model(**inputs, labels=labels).loss

Example of multi-label classification:

>>> import torch
>>> from transformers import AutoTokenizer, BloomForSequenceClassification

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")
>>> model = BloomForSequenceClassification.from_pretrained("bigscience/bloom-560m", problem_type="multi_label_classification")

>>> inputs = tokenizer("Hello, my dog is cute", return_tensors="pt")

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> predicted_class_ids = torch.arange(0, logits.shape[-1])[torch.sigmoid(logits).squeeze(dim=0) > 0.5]

>>> # To train a model on `num_labels` classes, you can pass `num_labels=num_labels` to `.from_pretrained(...)`
>>> num_labels = len(model.config.id2label)
>>> model = BloomForSequenceClassification.from_pretrained(
...     "bigscience/bloom-560m", num_labels=num_labels, problem_type="multi_label_classification"
... )

>>> labels = torch.sum(
...     torch.nn.functional.one_hot(predicted_class_ids[None, :].clone(), num_classes=num_labels), dim=1
... ).to(torch.float)
>>> loss = model(**inputs, labels=labels).loss

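BloomForTokenClassification

class transformers.BloomForTokenClassification

( config: BloomConfig )

Parameters

- config (BloomConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

Bloom Model with a token classification head on top (a linear layer on top of the hidden-states output), e.g. for Named-Entity-Recognition (NER) tasks.

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, etc.).

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( input_ids : typing.Optional[torch.LongTensor] = None, past_key_values : typing.Union[typing.Tuple[typing.Tuple[torch.Tensor, torch.Tensor], ...], NoneType] = None, attention_mask : typing.Optional[torch.Tensor] = None, head_mask : typing.Optional[torch.Tensor] = None, inputs_embeds : typing.Optional[torch.Tensor] = None, labels : typing.Optional[torch.Tensor] = None, …, output_attentions : typing.Optional[bool] = None, output_hidden_states : typing.Optional[bool] = None, … ) → transformers.modeling_outputs.TokenClassifierOutput or tuple(torch.FloatTensor)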

Parameters

- input_ids (torch.LongTensor of shape (batch_size, input_ids_length)) — input_ids_length = sequence_length if past_key_values is None else past_key_values[0][0].shape[2] (sequence_length of input past key value states). Indices of input sequence tokens in the vocabulary. If past_key_values is used, only input_ids that do not have their past calculated should be passed as input_ids. Indices can be obtained using AutoTokenizer. See PreTrainedTokenizer.encode() and PreTrainedTokenizer.__call__() for details.
- past_key_values (Tuple[Tuple[torch.Tensor]] of length config.n_layers) — Contains precomputed hidden-states (key and values in the attention blocks) as computed by the model (see past_key_values output below). Can be used to speed up sequential decoding. The input_ids which have their past given to this model should not be passed as input_ids, as they have already been computed. Each element of past_key_values is a tuple (past_key, past_value):
  - past_key: [batch_size * num_heads, head_dim, kv_length]
  - past_value: [batch_size * num_heads, kv_length, head_dim]
- attention_mask (torch.FloatTensor of shape (batch_size, sequence_length), optional) — Mask to avoid performing attention on padding token indices. Mask values selected in [0, 1]:
  - 1 for tokens that are not masked,
  - 0 for tokens that are masked.
- head_mask (torch.FloatTensor of shape (num_heads,) or (num_layers, num_heads), optional) — Mask to nullify selected heads of the self-attention modules. Mask values selected in [0, 1]:
  - 1 indicates the head is not masked,
  - 0 indicates the head is masked.
- inputs_embeds (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size), optional) — Optionally, instead of passing input_ids you can choose to directly pass an embedded representation. This is useful if you want more control over how to convert input_ids indices into associated vectors than the model’s internal embedding lookup matrix. If past_key_values is used, optionally only the last inputs_embeds have to be input (see past_key_values).
- use_cache (bool, optional) — If set to True, past_key_values key value states are returned and can be used to speed up decoding (see past_key_values).
- output_attentions (bool, optional) — Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.
- labels (torch.LongTensor of shape (batch_size,), optional) — Labels for computing the sequence classification/regression loss. Indices should be in [0, ..., config.num_labels - 1]. If config.num_labels == 1 a regression loss is computed (Mean-Square loss). If config.num_labels > 1 a classification loss is computed (Cross-Entropy).

Returns

transformers.modeling_outputs.TokenClassifierOutput or tuple(torch.FloatTensor)

A transformers.modeling_outputs.TokenClassifierOutput or a tuple of torch.FloatTensor (if return_dict=False is passed or when config.return_dict=False) comprising various elements depending on the configuration (BloomConfig) and inputs.

- loss (torch.FloatTensor of shape (1,), optional, returned when labels is provided) — Classification loss.
- logits (torch.FloatTensor of shape (batch_size, sequence_length, config.num_labels)) — Classification scores (before SoftMax).
- hidden_states (tuple(torch.FloatTensor), optional, returned when output_hidden_states=True is passed or when config.output_hidden_states=True) — Tuple of torch.FloatTensor (one for the output of the embeddings, if the model has an embedding layer, + one for the output of each layer) of shape (batch_size, sequence_length, hidden_size). Hidden-states of the model at the output of each layer plus the optional initial embedding outputs.
- attentions (tuple(torch.FloatTensor), optional, returned when output_attentions=True is passed or when config.output_attentions=True) — Tuple of torch.FloatTensor (one for each layer) of shape (batch_size, num_heads, sequence_length, sequence_length). Attention weights after the attention softmax, used to compute the weighted average in the self-attention heads.

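The BloomForTokenClassification forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of calling forward directly, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.

Example:

>>> from transformers import AutoTokenizer, BloomForTokenClassification
>>> import torch

>>> tokenizer = AutoTokenizer.from_pretrained("bigscience/bloom-560m")
>>> model = BloomForTokenClassification.from_pretrained("bigscience/bloom-560m")

>>> inputs = tokenizer(
...     "HuggingFace is a company based in Paris and New York", add_special_tokens=False, return_tensors="pt"
... )

>>> with torch.no_grad():
...     logits = model(**inputs).logits

>>> predicted_token_class_ids = logits.argmax(-1)

>>> # Note that tokens are classified rather than input words, which means that
>>> # there might be more predicted token classes than words.
>>> # Multiple token classes might account for the same word
>>> predicted_tokens_classes = [model.config.id2label[t.item()] for t in predicted_token_class_ids[0]]

>>> labels = predicted_token_class_ids
>>> loss = model(**inputs, labels=labels).loss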

BloomForQuestionAnswering

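class transformers.BloomForQuestionAnswering

( config )

Parameters

- config (BloomConfig) — Model configuration class with all the parameters of the model. Initializing with a config file does not load the weights associated with the model, only the configuration. Check out the from_pretrained() method to load the model weights.

The BLOOM Model transformer with a span classification head on top for extractive question-answering tasks like SQuAD (a linear layer on top of the hidden-states output to compute span start logits and span end logits).

This model inherits from PreTrainedModel. Check the superclass documentation for the generic methods the library implements for all its models (such as downloading or saving, resizing the input embeddings, etc.).

This model is also a PyTorch torch.nn.Module subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matters related to general usage and behavior.

forward

( input_ids : typing.Optional[torch.LongTensor] = None, attention_mask : typing.Optional[torch.FloatTensor] = None, … : typing.Optional[torch.FloatTensor] = None, start_positions : typing.Optional[torch.LongTensor] = None, end_positions : typing.Optional[torch.LongTensor] = None, output_attentions : typing.Optional[bool] = None, output_hidden_states : typing.Optional[bool] = None, return_dict : typing.Optional[bool] = None )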

Parameters

- input_ids (torch.LongTensor of shape (batch_size, input_ids_length)) — input_ids_length = sequence_length if past_key_values is None else past_key_values[0][0].shape[2] (sequence_length of input past key value states). Indices of input sequence tokens in the vocabulary. If past_key_values is used, only input_ids that do not have their past calculated should be passed as input_ids. Indices can be obtained using AutoTokenizer. See PreTrainedTokenizer.encode() and PreTrainedTokenizer.__call__() for details.
- past_key_values (Tuple[Tuple[torch.Tensor]] of length config.n_layers) — Contains precomputed hidden-states (key and values in the attention blocks) as computed by the model (see past_key_values output below). Can be used to speed up sequential decoding. The input_ids which have their past given to this model should not be passed as input_ids, as they have already been computed. Each element of past_key_values is a tuple (past_key, past_value):
  - past_key: [batch_size * num_heads, head_dim, kv_length]
  - past_value: [batch_size * num_heads, kv_length, head_dim]
- attention_mask (torch.FloatTensor of shape (batch_size, sequence_length), optional) — Mask to avoid performing attention on padding token indices. Mask values selected in [0, 1]:
  - 1 for tokens that are not masked,
  - 0 for tokens that are masked.
- head_mask (torch.FloatTensor of shape (num_heads,) or (num_layers, num_heads), optional) — Mask to nullify selected heads of the self-attention modules. Mask values selected in [0, 1]:
  - 1 indicates the head is not masked,
  - 0 indicates the head is masked.
- inputs_embeds (torch.FloatTensor of shape (batch_size, sequence_length, hidden_size), optional) — Optionally, instead of passing input_ids you can choose to directly pass an embedded representation. This is useful if you want more control over how to convert input_ids indices into associated vectors than the model’s internal embedding lookup matrix. If past_key_values is used, optionally only the last inputs_embeds have to be input (see past_key_values).
- use_cache (bool, optional) — If set to True, past_key_values key value states are returned and can be used to speed up decoding (see past_key_values).
- output_attentions (bool, optional) — Whether or not to return the attentions tensors of all attention layers. See attentions under returned tensors for more detail.
- output_hidden_states (bool, optional) — Whether or not to return the hidden states of all layers. See hidden_states under returned tensors for more detail.
- return_dict (bool, optional) — Whether or not to return a ModelOutput instead of a plain tuple.
- start_positions (torch.LongTensor of shape (batch_size,), optional) — Labels for position (index) of the start of the labelled span for computing the token classification loss. Positions are clamped to the length of the sequence (sequence_length). Positions outside of the sequence are not taken into account for computing the loss.
- end_positions (torch.LongTensor of shape (batch_size,), optional) — Labels for position (index) of the end of the labelled span for computing the token classification loss. Positions are clamped to the length of the sequence (sequence_length). Positions outside of the sequence are not taken into account for computing the loss.

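The BloomForQuestionAnswering forward method overrides the __call__ special method.

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of calling forward directly, since the former takes care of running the pre- and post-processing steps while the latter silently ignores them.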
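The span start and span end logits computed by the question-answering head are typically turned into an answer by picking the best-scoring (start, end) pair. The plain-Python sketch below shows one common way to do that; best_span and its max_answer_len parameter are hypothetical names introduced for illustration, not part of the library, and the logits are made-up values for a four-token sequence.

```python
from typing import List, Tuple


def best_span(start_logits: List[float], end_logits: List[float],
              max_answer_len: int = 30) -> Tuple[int, int]:
    """Return the (start, end) token indices with the highest combined score.

    Only spans with end >= start and at most max_answer_len tokens are considered.
    """
    best, best_score = (0, 0), float("-inf")
    for start, start_score in enumerate(start_logits):
        last_end = min(len(end_logits), start + max_answer_len)
        for end in range(start, last_end):
            score = start_score + end_logits[end]
            if score > best_score:
                best, best_score = (start, end), score
    return best


start_logits = [0.1, 2.5, 0.3, 0.2]
end_logits = [0.0, 0.4, 3.1, 0.5]
span = best_span(start_logits, end_logits)
print(span)
```

The selected indices refer to token positions in input_ids, so the answer text is recovered by decoding the tokens between the start and end index, inclusive.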